Keypoint-Augmented Self-Supervised Learning for Medical Image Segmentation with Limited Annotation

Zhangsihao Yang, Mengwei Ren, Kaize Ding, Guido Gerig, Yalin Wang

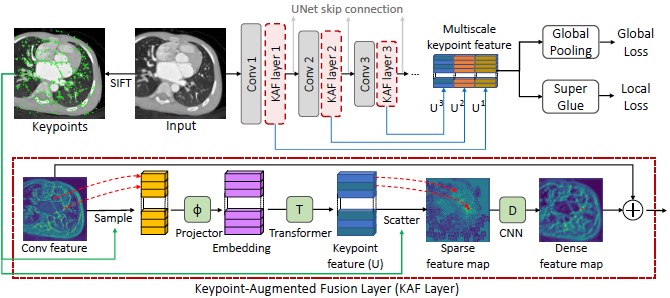

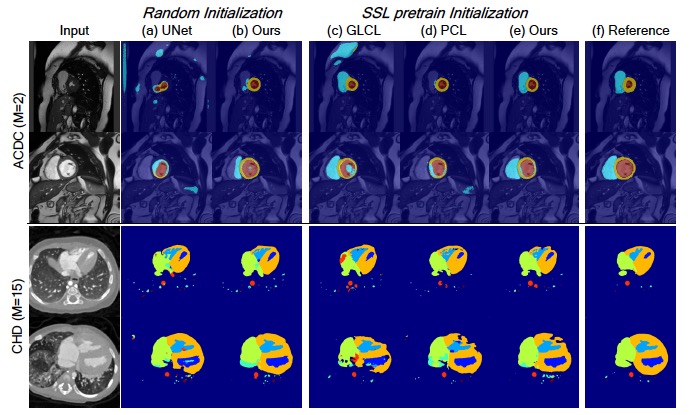

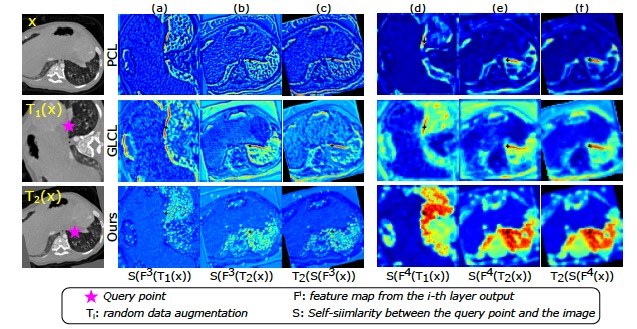

Pretraining CNN models (i.e., UNet) through self-supervision has become a pow2erful approach to facilitate medical image segmentation under low annotation3 regimes. Recent contrastive learning methods encourage similar global repre4sentations when the same image undergoes different transformations, or enforce5 invariance across different image/patch features that are intrinsically correlated.6 However, CNN-extracted global and local features are limited in capturing long7range spatial dependencies that are essential in biological anatomy. To this end, we8 present a keypoint-augmented fusion layer that extracts representations preserving9 both short- and long-range self-attention. In particular, we augment the CNN10 feature map at multiple scales by incorporating an additional input that learns long11range spatial self-attention among localized keypoint features. Additionally, we12 introduce both global and local self-supervised learning algorithms to pretrain the13 framework without using human-annotated labels. At the global scale, we perform14 a global pooling of the keypoint features across different scales to obtain a global15 representation, which is utilized for a global contrastive objective. At the local16 scale, we define a distance-based criterion to first establish correspondences among17 keypoints and encourage similarity between their features. Through experiments18 conducted on two cardiac datasets, we demonstrate the architectural advantages of19 our proposed method compared to the CNN-only UNet architecture, when both20 architectures are trained with randomly initialized weights. With our proposed21 pretraining strategy, our method outperforms existing SSL methods by producing22 more robust self-attention and achieving state-of-the-art segmentation results.