Congratulations to Yanxi Chen, Xuanzhao Dong, and Xin Li on the acceptance of their papers by ISBI 2025!

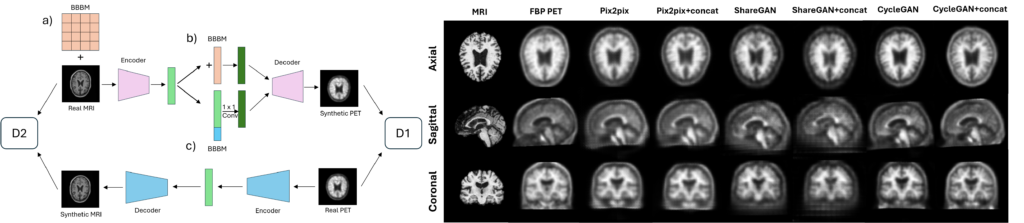

Plasma-CycleGAN: Plasma Biomarker-Guided MRI to PET Cross-modality Translation Using Conditional CycleGAN

Yanxi Chen, Yi Su, Celine Dumitrascu, Kewei Chen, David Weidman, Richard J Caselli, Nicholas Ashton, Eric M Reiman, Yalin Wang

Summary: We propose Plasma-CycleGAN, a generative model based on CycleGAN that synthesizes PET images from MRI using blood-based biomarkers (BBBMs) as conditions. This is the first approach to integrate BBBMs into conditional cross-modality translation between MRI and PET.

TPOT: Topology Preserving Optimal Transport in Retinal Fundus Image Enhancement

Xuanzhao Dong, Wenhui Zhu, Xin Li, Guoxin Sun, Yi Su, Oana M. Dumitrascu, Yalin Wang

Summary: Retinal fundus photography enhancement is important for diagnosing and monitoring retinal diseases. However, early approaches to retinal image enhancement, such as those based on Generative Adversarial Networks (GANs), often struggle to preserve the complex topological information of blood vessels, resulting in spurious or missing vessel structures. The persistence diagram, which records how long topological features persist across different filtrations, offers a promising way to represent this structural information.

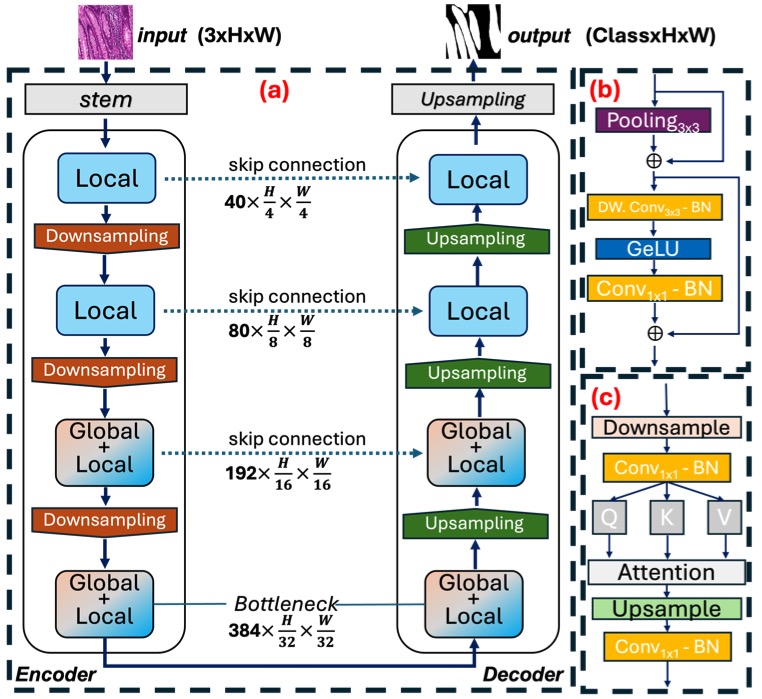

EViT-Unet: U-Net Like Efficient Vision Transformer for Medical Image Segmentation on Mobile and Edge Devices

Xin Li, Wenhui Zhu, Xuanzhao Dong, Oana M. Dumitrascu, Yalin Wang

Summary: With the rapid development of deep learning, CNN-based U-shaped networks have succeeded in medical image segmentation and are widely applied to various tasks. However, their limited ability to capture global features hinders their performance in complex segmentation tasks. The rise of the Vision Transformer (ViT) has effectively compensated for this deficiency of CNNs and promoted the application of ViT-based U-shaped networks in medical image segmentation.