Deep Representation Learning for Multimodal Brain Networks

Wen Zhang, Liang Zhan, Paul Thompson, and Yalin Wang

Abstract

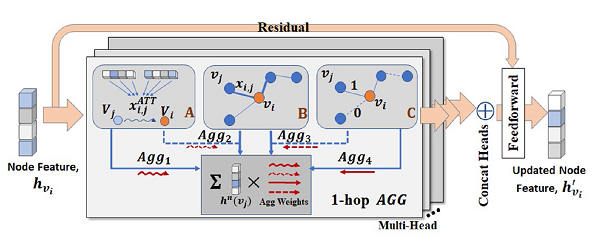

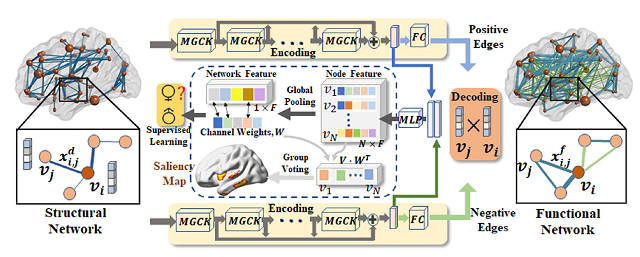

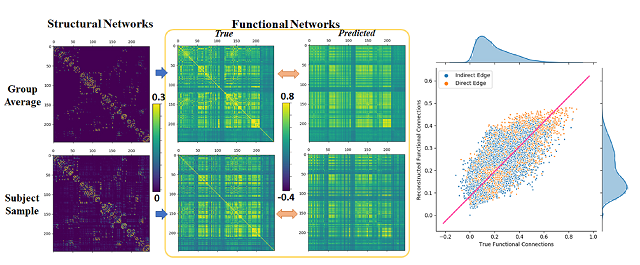

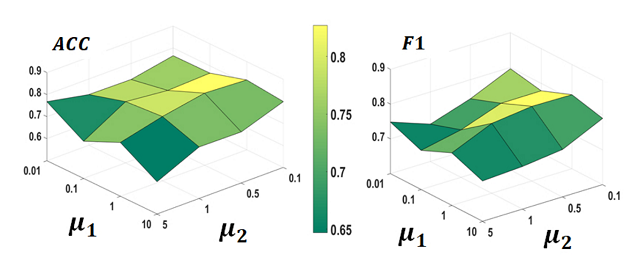

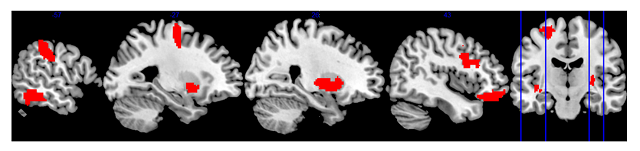

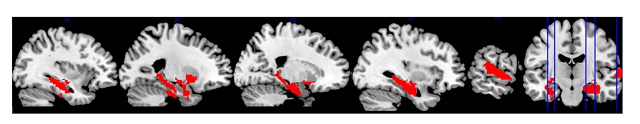

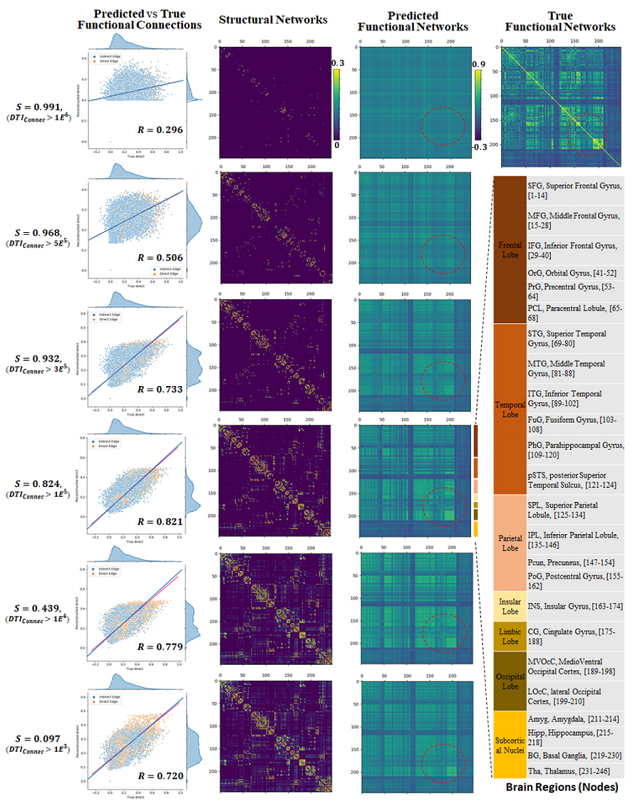

Applying network science approaches to investigate the functions and anatomy of the human brain is prevalent in modern medical imaging analysis. Because brain network topology is complex, mining a discriminative representation of an individual brain from its multimodal brain networks is non-trivial. The recent success of deep learning techniques on graph-structured data suggests a new way to model the non-linear cross-modality relationship. However, current deep brain network methods either ignore the intrinsic graph topology or require a network basis shared within a group. To address these challenges, we propose a novel end-to-end deep graph representation learning framework, Deep Multimodal Brain Networks (DMBN), to fuse multimodal brain networks. Specifically, we decipher the cross-modality relationship through a graph encoding and decoding process. The higher-order network mappings from brain structural networks to functional networks are learned in the node domain. The learned network representation is a set of node features that are informative enough to induce brain saliency maps in a supervised manner. We test our framework on both synthetic and real image data. The experimental results show that the proposed method outperforms several other state-of-the-art deep brain network models.
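To make the encoding and decoding idea concrete, here is a minimal NumPy sketch of a generic graph encoder-decoder. It is not the DMBN architecture: the function names (`normalize_adjacency`, `encode`, `decode`), the layer sizes, the one-hot node inputs, the inner-product decoder with `tanh`, and the random untrained weights are all assumptions made for illustration. Node features are propagated over a structural network to produce per-node embeddings, and those embeddings are then decoded into a predicted functional connectivity matrix.

```python
import numpy as np

def normalize_adjacency(a):
    """Symmetrically normalize A + I, as in a standard graph convolution."""
    a_hat = a + np.eye(a.shape[0])          # add self-loops
    deg = a_hat.sum(axis=1)                 # strictly positive due to self-loops
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def encode(a_struct, x, w1, w2):
    """Two propagation steps over the structural graph -> node embeddings."""
    a_norm = normalize_adjacency(a_struct)
    h = np.maximum(a_norm @ x @ w1, 0.0)    # first layer with ReLU
    return a_norm @ h @ w2                  # second layer reaches 2-hop neighbors

def decode(z):
    """Inner-product decoder: predicted functional connectivity in (-1, 1)."""
    return np.tanh(z @ z.T)

# Hypothetical toy data: a random symmetric structural network on 6 regions.
rng = np.random.default_rng(0)
n_nodes, n_hidden, n_embed = 6, 8, 4
a_struct = rng.random((n_nodes, n_nodes))
a_struct = (a_struct + a_struct.T) / 2      # make it symmetric
np.fill_diagonal(a_struct, 0.0)             # no self-connections in the raw network

x = np.eye(n_nodes)                         # one-hot node features (assumption)
w1 = rng.normal(scale=0.1, size=(n_nodes, n_hidden))   # untrained weights
w2 = rng.normal(scale=0.1, size=(n_hidden, n_embed))

z = encode(a_struct, x, w1, w2)             # node-level representation
f_pred = decode(z)                          # predicted functional network
```

In a trained model, the weights would be fitted so that `f_pred` matches the measured functional network, and the node embeddings `z` would also feed a supervised prediction head. Both steps are omitted here.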


Related Publications

- Zhang W, Wang Y, “Deep Multimodal Brain Network Learning for Joint Analysis of Structural Morphometry and Functional Connectivity”, in IEEE International Symposium on Biomedical Imaging: From Nano to Macro (ISBI), Apr. 2020